- cross-posted to:

- [email protected]

- [email protected]

- [email protected]

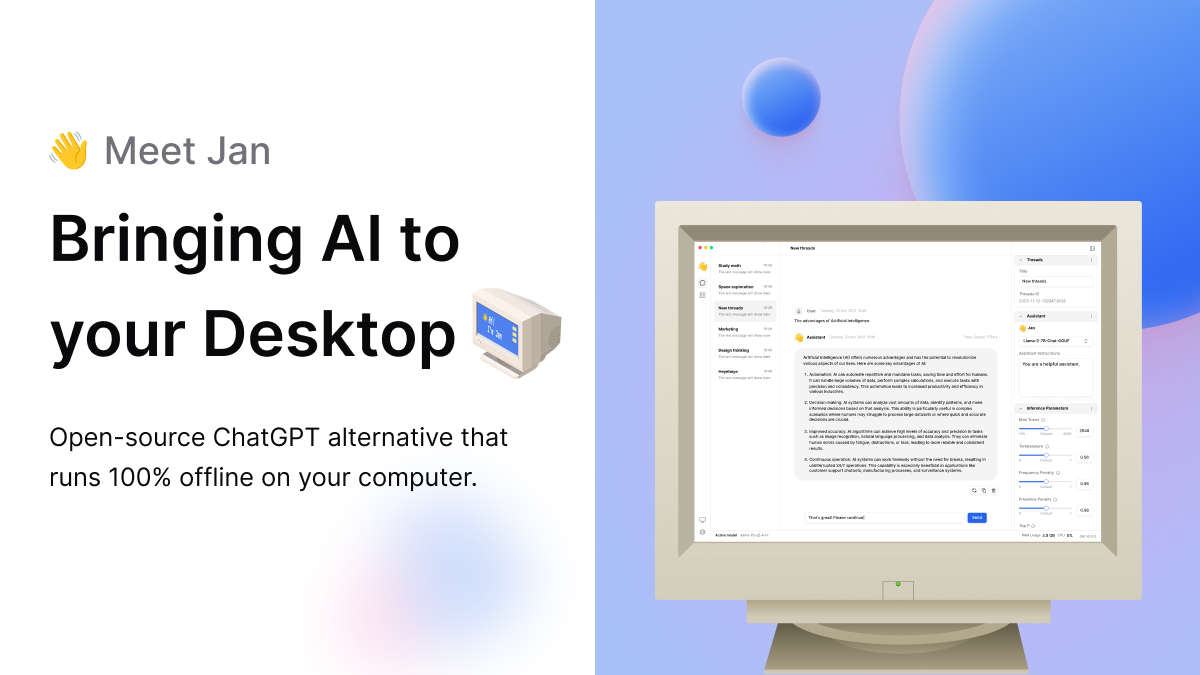

This isn’t really a significant player in the space yet. The field of local LLM frontends is extremely active right now, with new projects popping up constantly and user counts shifting around. Here’s a good article that reviews the most prominent ones and ranks them for different uses: https://matilabs.ai/2024/02/07/run-llms-locally/

TLDR from the article:

Having tried all of these tools, I find they are trying to solve for a few different problems. So, depending on what you are looking to do, here are my conclusions:

- If you are looking to develop an AI application, and you have a Mac or Linux machine, Ollama is great because it’s very easy to set up, easy to work with, and fast.

- If you are looking to chat locally with documents, GPT4All is the best out-of-the-box solution that is also easy to set up.

- If you are looking for advanced control and insight into neural networks and machine learning, as well as the widest range of model support, you should try transformers

- In terms of speed, I think Ollama and llama.cpp are both very fast.

- If you are looking to work with a CLI tool, llm is clean and easy to set up

- If you want to use Google Cloud, you should look into localllm

- For native support for roleplay and gaming (adding characters, persistent stories), the best choices are textgen-webui by Oobabooga and koboldcpp. Alternatively, you can use Ollama with custom UIs such as ollama-webui.
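For anyone wondering what "developing an AI application" against Ollama looks like in practice, here is a minimal sketch using only the Python standard library. It assumes an Ollama server is already running locally on its default port (11434) and that you have pulled a model; the model name `llama2` in the usage note is just a placeholder, and the helper names `build_generate_request` and `generate` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port, 11434.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": False, the reply is a single JSON object whose
    # "response" field holds the full completion.
    return body["response"]
```

With a server up, something like `print(generate("llama2", "Why is the sky blue?"))` should return the completion as a plain string. Swap in whichever model you actually have installed.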

I’ve been using GPT4All locally, and that does work pretty well. It’s worth noting that a lot of these will work with the same models. So, the difference is mostly in the UI ergonomics.

Good point. I’ve been dabbling with Khoj myself, which connects with Obsidian, the Markdown note-taking software I use. This lets the AI draw from my notes on the fly.

I’m waiting to build a PC with the RTX 5090 or something before diving deeper into local AI. The integrated GPU I’m using right now is just too slow.

That’s a pretty neat use case. And yeah, a dedicated GPU is kind of a must for running locally.