Hello shavers and shavettes,

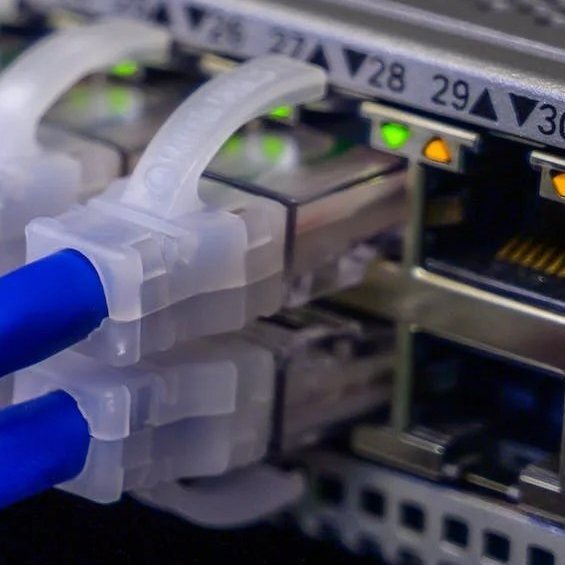

Things have been pretty stable on the hosting side, which is great! There was a small hiccup with the auto-post bot a while ago, but I was able to get it back up and running without much hassle.

Today I’ve been working on the picture hosting side of our Lemmy instance. If you weren’t aware, you can upload pictures here, and they get saved in Cloudflare R2 object storage. It’s not just uploaded photos; thumbnails, etc., get saved there too. Currently that uses about 16.5 GB of storage.

One concern I’ve had is potential abuse of the photo storage. Bad actors can upload whatever they want, including illegal stuff.

I’ve thought about disabling photo uploads and requiring the use of other hosting options like imgur.com, but that’s no fun. The other option, which is what we’re going with right now, is software called “fedi-safety”.

I have it up and running on my home server for testing, and will move it to the main Lemmy server once I have it figured out. As of right now, every photo uploaded here has been scanned, and any deemed “bad” have been deleted. The tool has a high false-positive rate because it errs on the side of caution. It’s not perfect, but it is preferable to the alternative.

That’s all for now. Happy shaving.

-The Admins

I thought I needed a GPU, but it turns out that’s only if you want it to scan things before they ever make it to storage. It can run detection on the CPU, but that’s not fast enough to catch things during upload. Instead it scans for new things every 10 seconds or so.
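For anyone curious what "scan for new things every 10 seconds" looks like in practice, here is a rough sketch in Python. To be clear, this is not fedi-safety’s actual code, just my illustration of the general polling idea. The names `list_keys`, `is_flagged`, and `delete` are made-up stand-ins for "list what’s in storage", "run the classifier", and "remove the file".

```python
import time
from typing import Callable, Iterable, List, Optional, Set


def scan_once(
    list_keys: Callable[[], Iterable[str]],   # hypothetical: lists object keys in storage
    is_flagged: Callable[[str], bool],        # hypothetical: classifier verdict for one key
    delete: Callable[[str], None],            # hypothetical: removes one object from storage
    seen: Set[str],
) -> List[str]:
    """Check every key not seen before and delete the ones that get flagged."""
    removed = []
    for key in list_keys():
        if key in seen:
            continue  # already checked on an earlier pass
        seen.add(key)
        if is_flagged(key):
            delete(key)
            removed.append(key)
    return removed


def poll(
    list_keys: Callable[[], Iterable[str]],
    is_flagged: Callable[[str], bool],
    delete: Callable[[str], None],
    interval_seconds: float = 10.0,
    max_passes: Optional[int] = None,  # None means run forever
) -> None:
    """Run scan_once repeatedly, sleeping between passes."""
    seen: Set[str] = set()
    passes = 0
    while max_passes is None or passes < max_passes:
        scan_once(list_keys, is_flagged, delete, seen)
        passes += 1
        if max_passes is None or passes < max_passes:
            time.sleep(interval_seconds)
```

The trade-off is the one described above: a picture can sit in storage for a few seconds before it gets checked, but the scanner never holds up an upload.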

@walden Ah, that’s good to know! Noice!

Well, no matter what I try, I can’t get it to work on the server. It works in my homelab, which has direct access to some CPU cores but no GPU (not even Intel graphics), but when I run it on the host server it spikes the CPU to 100%, crashes, and starts the cycle over again.

I’ll keep it running locally for now, as it’s a pretty small service overall.